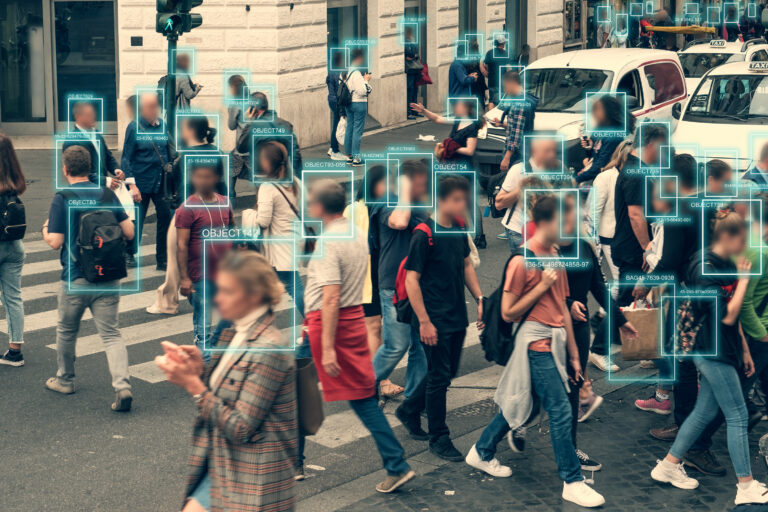

It is hard to imagine feeling truly safe anywhere in the world today. But it seems increasingly plausible that an even less secure world awaits us as artificial intelligence (AI) moves into spaces traditionally occupied by humans. Though we often associate the rise of AI with doomsday scenarios, some of the threats it poses may be less apocalyptic and more familiar. In the age of the #MeToo and #BlackLivesMatter movements, which are rapidly revealing inequities in our society, AI technology has been lambasted for relying on algorithms that reinforce racist and biased stereotypes. How can we break the bias before it's too late?

While AI has improved health outcomes by diagnosing disease and making treatment faster and more effective in clinical settings, it has also compounded existing inequities across health systems with regard to socioeconomic status, race, ethnic background, religion, gender, disability, and sexual orientation. For example, AI tools built to detect and treat various health conditions can favor white patients because of the data they were trained on (as has happened with facial recognition), and can therefore discriminate against black and brown people.

The COVID-19 crisis underscores just how powerful AI is when it comes to our health and well-being. In recent months, social media companies have been rightly blamed for using AI-powered algorithms to target users with tailored content that reinforces their prejudices and misunderstandings about the pandemic and the health measures needed to combat it. Mistrust and infodemics have spiralled out of control in local communities, spreading misinformation and leading to misdiagnosis. As a result, evidence-based science and data have failed to reach many people in the most vulnerable and isolated groups, which has increased infection rates.

It is therefore urgent to dissect these rapidly developing technologies so that we can evaluate and mitigate rising health burdens more holistically and inclusively.

Moreover, AI is built by humans embedded in colonial systems and institutions marked by historical exploitation and bias, which greatly skew the outcomes the AI is asked to predict. AI systems can also show widely varying accuracy rates across different demographic groups and depending on how those groups are represented in the data.

To address these evolving, frontline issues, the think and do tank Geneva Macro Labs (GEMLABS) will host the interactive session "AI and health. How to break the bias before it's too late" on March 9 at 18:00 CET, in commemoration of International Women's Day (#BreakTheBias). We will explore the specific biases in AI health algorithms and how to tackle these inequities. We will also examine how AI technology shapes our virtual IDs, our perceptions of who we are, and, inevitably, the ways we will receive and access health services as we lean harder on technological tools to respond more rapidly to health crises. Two special guest speakers will join us:

The Greenlining Institute's CEO, Debra Gore-Mann, will highlight solutions to end algorithmic discrimination and build more equitable automated decision systems by focusing on algorithmic transparency and accountability.

Nigerian Dr Ayomide Owoyemi, a health informatics specialist based in Chicago, will underscore the links between inclusive, trusted digital technology in health and development.

As part of its goal to improve the governance and management of AI technologies and to provide solutions, GEMLABS will identify the key takeaways from this interactive session.

To be clear, AI has the potential to improve health outcomes around the world. The problem is the rapid, lawless, and unregulated development of AI tools that bypass international standards. We could do much more to ensure that the technology rests on a strong anti-racist, anti-bias ethical foundation that encompasses the rule of law and the promotion of human rights and dignity for all.

We have a way forward. We must build a larger and more diverse community of data science experts in order to reduce racial and gender bias. We must also create and strengthen regulations, international rules, and codes of conduct governing the use of AI. And most importantly, we must work collectively to provide these solutions. Join us!